Predicting whole-brain neural dynamics from prefrontal cortex functional near-infrared spectroscopy signal during movie-watching

Abstract

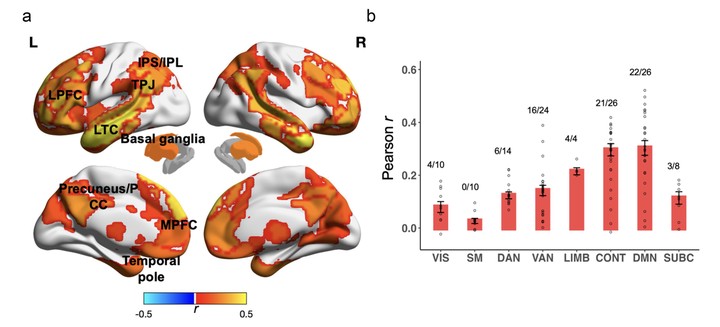

Functional near-infrared spectroscopy (fNIRS) offers a portable, cost-effective alternative to functional magnetic resonance imaging (fMRI) for noninvasively measuring neural activity. However, fNIRS measurements are limited to cortical regions near the scalp, missing important medial and deeper brain areas. We introduce a predictive model that maps prefrontal fNIRS signals to whole-brain fMRI activity during movie-watching. By aligning neural responses to a common audiovisual stimulus, our approach leverages dynamics shared across imaging modalities to relate fNIRS signals to broader neural activity patterns. We scanned participants with fNIRS and used a publicly available fMRI dataset of participants watching the same TV episode. The model was trained on the first half of the episode and tested on a held-out participant watching the second half to assess cross-individual and cross-stimulus generalizability. The model significantly predicted fMRI time courses in 66 out of 122 brain regions, including areas otherwise inaccessible to fNIRS. It also replicated intersubject functional connectivity patterns and retained semantic information about the movie content. The model generalized to an independent dataset from a different TV series, suggesting that it captures robust cross-modal mappings across stimuli. Our publicly available models enable researchers to infer broader neural dynamics from localized fNIRS data during naturalistic tasks.
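The train-on-first-half, test-on-second-half evaluation described above can be illustrated with a minimal sketch. The abstract does not specify the model class, so ridge regression is used here purely as an assumed stand-in. The data are synthetic, the channel count and number of time points are made up, and the function names (`fit_ridge`, `columnwise_corr`) are hypothetical. Only the count of 122 regions comes from the text. The sketch also omits the held-out-participant split and the stimulus-based alignment of separate fNIRS and fMRI cohorts.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X'X + alpha*I)^-1 X'Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def columnwise_corr(A, B):
    """Pearson correlation between matching columns of A and B."""
    A = A - A.mean(axis=0)
    B = B - B.mean(axis=0)
    denom = np.linalg.norm(A, axis=0) * np.linalg.norm(B, axis=0)
    return (A * B).sum(axis=0) / denom

rng = np.random.default_rng(0)
# 122 regions as in the abstract; the other sizes are illustrative assumptions.
n_time, n_channels, n_regions = 400, 8, 122

# Synthetic stand-ins: each "fMRI region" is a noisy linear mix of "fNIRS channels".
fnirs = rng.standard_normal((n_time, n_channels))
mixing = rng.standard_normal((n_channels, n_regions))
fmri = fnirs @ mixing + rng.standard_normal((n_time, n_regions))

# Train on the first half of the time series, test on the second half.
half = n_time // 2
W = fit_ridge(fnirs[:half], fmri[:half], alpha=10.0)
pred = fnirs[half:] @ W

# One prediction accuracy (Pearson r) per region on the held-out half.
r = columnwise_corr(pred, fmri[half:])
print(r.shape, float(r.mean()))
```

In a real analysis, the per-region correlations would be tested against a null distribution before any region is counted as significantly predicted. That step is left out here.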